CS 4973: Bias and Ethical Implications in Artificial Intelligence

Instructor: Malihe Alikhani

Time and Place: Tue & Fri 3:25-5:05 pm

Prospects on Ethics of AI

Benjamin Kuipers. 2020. Perspectives on Ethics of AI: Computer Science. To appear in Markus Dubber, Frank Pasquale, and Sunit Das (Eds.), Oxford Handbook of Ethics of AI, Oxford University Press, 2020.

Welcome!

I'm very excited to welcome you to this new advanced AI ethics class.

Artificial intelligence holds tremendous promise to benefit nearly all aspects of society, including healthcare, food production, the economy, education, security, the law, and even our personal activities. The development of AI is creating new opportunities to improve the lives of people around the world. At the same time, these intelligent models may incorporate existing biases or create new biases that can seriously harm society. At its worst, AI can exacerbate misguided old practices and aggravate past social harms with its unprecedented processing power and its veneer of seeming objectivity, as people in different social groups are disparately impacted by AI-aided decisions.

Facing the ethical implications of AI, students need to be prepared with the critical intellectual capacities that allow them to understand and deal with these ethical challenges. These capacities comprise multi-disciplinary concepts ranging from statistical learning theory, model design, and ethical foundations to the psychological and cultural frameworks that are necessary for successfully navigating and evaluating responsible AI practices. Further, “ethical competence” will involve understanding various challenges surrounding AI, such as ethical regulation, fairness assessment, and interpretability of models.

Note that this is a technical class. Our focus will be on designing and evaluating machine learning models and on mitigating bias in them. You will have three programming assignments in Python.

Students will be introduced to real-world applications of AI and the potential ethical implications associated with them. We will discuss the philosophical foundations of ethical research along with advanced state-of-the-art techniques. Discussion topics include:

-

Prospects on ethics of AI

-

Ethical dilemmas of AI

-

The case against AI

-

Fairness in machine learning

-

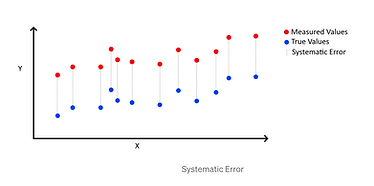

Bias in data

-

Ethical machine learning in health

-

NLP as a tool for detecting stereotypes

-

Blackbox algorithms and epistemic opacity

-

Fairness in RL

Students are required to demonstrate AI for good in action with a mini-project and write a critique on current codes of ethics for the machine learning community. I've drawn on related classes such as Emily Bender's Ethics in NLP.

The detailed schedule of the class is posted on the course Canvas webpage. The following is the list of suggested reading.

Ethical dilemmas of AI

Artificial Intelligence: examples of ethical dilemmas, UNESCO

Hagendorff, T. (2020). The Ethics of AI Ethics: An Evaluation of Guidelines. Minds and Machines.

Never mind: the case against artificial intelligence

"Understanding, Orientations, and Objectivity", Winograd, 2002

Fairness in machine learning–classification

Barocas, Solon, Moritz Hardt, and Arvind Narayanan. "Fairness in machine learning." NIPS Tutorial 1 (2017), pp. 49-56.

Fairness in machine learning–causality

Barocas, Solon, Moritz Hardt, and Arvind Narayanan. "Fairness in machine learning." NIPS Tutorial 1 (2017), pp. 79-90.

Where does the data come from?

Timnit Gebru, Jamie Morgenstern, Briana Vecchione, Jennifer Wortman Vaughan, Hanna Wallach, Hal Daumé III, Kate Crawford. 2020. Datasheets for Datasets.

Exclusion/discrimination/bias in data

Casey Fiesler and Nicholas Proferes. 2018. “Participant” Perceptions of Twitter Research Ethics. Social Media + Society, 4(1).

Sometimes it's unethical *not* to run an experiment

Chen, Irene Y., et al. "Ethical machine learning in health." arXiv preprint arXiv:2009.10576 (2020).

Value sensitive design

Friedman, B., Hendry, D. G., Borning, A., et al. (2017). A survey of value-sensitive design methods. Foundations and Trends in Human-Computer Interaction, Chapter 3.

NLP as a tool for detecting stereotypes

Elliott Ash, Daniel L. Chen, Arianna Ornaghi. 2020. Stereotypes in High-Stakes Decisions: Evidence from U.S. Circuit Courts. NBER Manuscript.

Gonen, Hila, and Yoav Goldberg. 2019. "Lipstick on a pig: Debiasing methods cover up systematic gender biases in word embeddings but do not remove them." Proceedings of NAACL-HLT 2019.

Ethical issues in chatbots

Curry, Amanda Cercas, and Verena Rieser. 2018. "#MeToo Alexa: How Conversational Systems Respond to Sexual Harassment." In Proceedings of the Second ACL Workshop on Ethics in Natural Language Processing, pp. 7-14.

Black-box algorithms and epistemic opacity

Carabantes, Manuel. "Black-box artificial intelligence: an epistemological and critical analysis." AI & SOCIETY (2019): 1-9.

Fairness in reinforcement learning

Jabbari, Shahin, et al. "Fairness in reinforcement learning." International Conference on Machine Learning. PMLR, 2017.

Course Requirements and Grading

Research paper presentations

Each student will be required to give one research paper presentation as part of a team of two. The class has a Slack workspace that can help you get in touch with your classmates easily. We will read one paper every week.

A paper presentation should be about 20 minutes and will be followed by 15 minutes of class discussion led by the presenters.

The presentation should first summarize the content of the paper, clearly presenting: 1) What problem or task does the paper study? 2) What is the motivation for this problem/task, i.e., why is it important? 3) What is the novel algorithm/approach to this problem that the paper proposes? 4) How is this method evaluated, i.e., what is the experimental methodology, what data is utilized, and what performance metrics are used? 5) What are the basic results and conclusions of the paper?

Finally, the presentation should conclude with the presenters' critique of the paper, including: 1) Are there any reasons to question the motivation and importance of the problem studied? 2) Are there any limitations/weaknesses to the proposed approach to this problem? 3) Are there any limitations/weaknesses to the evaluation methodology and/or results? 4) What are some promising future research directions following up on this work?

The two presenters can decide how to divide the work of the presentation between themselves.

Paper critiques

Each student should submit six paper critiques on Slack. Pick six of the papers discussed in class and write a one-page critique of each. Use the critique questions listed above for the oral presentations to guide your discussion, but there is no need to address all of these questions in a given critique. Submit a nicely formatted PDF in 11pt font.

Programming assignment

The programming assignment is posted on Canvas.

Final research project

Final projects should ideally be done by teams of two students. Projects done by one or three students are possible on rare occasions with prior approval of the instructor. Each student is responsible for finding a partner for their final project. Feel free to post a message on Slack about your interests in order to find a partner with similar interests.

Submit a one-page project proposal by January 31 that briefly covers the first 4 questions for research paper presentations (see above). I will provide feedback on these proposals, but they will not be graded. Students are encouraged to discuss project proposals with me during office hours before submitting them. Groups are required to give a 15-minute presentation about their progress on the final project in the 10th week of the class. During the last week of class, each team will be required to give a 15-minute presentation on the current state of their project, and lead a 10-minute discussion of their project. Use the same format as the paired research paper presentations (see above), but you may have only preliminary actual results at that time to present. Submit the code to the GitHub repo for the class at midnight on April 18.

Final grade

The final grade will be computed as follows:

20% Class participation

10% Paired research paper presentation

10% Midterm

20% Programming assignment

40% Final project

(Extra credit) A critique of current codes of ethics for the machine learning community
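The breakdown above amounts to a weighted sum of component scores. As a minimal sketch, assuming each component is scored on a 0-100 scale, the Python snippet below shows the arithmetic. The names `WEIGHTS`, `final_grade`, and `example_scores` are hypothetical, the scores are made up for illustration, and extra credit is left out because its weight is not stated here.

```python
# Weights copied from the grade breakdown above, as fractions of the final grade.
WEIGHTS = {
    "class_participation": 0.20,
    "paper_presentation": 0.10,
    "midterm": 0.10,
    "programming_assignment": 0.20,
    "final_project": 0.40,
}


def final_grade(scores):
    """Return the weighted final grade on a 0-100 scale.

    `scores` maps every component name in WEIGHTS to a score in [0, 100].
    This is an illustrative assumption, not the official grading procedure.
    """
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Hypothetical scores, for illustration only.
example_scores = {
    "class_participation": 90,
    "paper_presentation": 85,
    "midterm": 80,
    "programming_assignment": 95,
    "final_project": 88,
}

print(round(final_grade(example_scores), 2))
```

With these made-up scores the components contribute 18, 8.5, 8, 19, and 35.2 points respectively, so the final project alone accounts for the largest share of the result.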

Academic integrity

All assignment submissions must be the sole work of each individual student. Students may not read or copy another student's solutions or share their own solutions with other students. Students may not review solutions from students who have taken the course in previous years. Submissions that are substantively similar will be considered cheating by all students involved, and as such, students must be mindful not to post their code publicly. The use of books and online resources is allowed, but must be credited in submissions, and material may not be copied verbatim. Any use of electronics or other resources during an examination will be considered cheating.

If you have any doubts about whether a particular action may be construed as cheating, ask the instructor for clarification before you do it. The instructor will make the final determination of what is considered cheating.

Cheating in this course will result in a grade of F for the course and may be subject to further disciplinary action.

Using an open-source codebase is acceptable, but you must explicitly cite the source and follow the owner's guidelines, if any exist. For any writing involved in the project, plagiarism is strictly prohibited. If you are unclear whether your work will be considered plagiarism, ask the instructor before submitting or presenting the work.

Students with disabilities

If you have a disability for which you are or may be requesting an accommodation, you are encouraged to contact both your instructor and Disability Resources and Services at https://drc.sites.northeastern.edu/.

Audio/video recordings

To ensure the free and open discussion of ideas, students may not record classroom lectures, discussions, and/or activities without the advance written permission of the instructor, and any such recording properly approved in advance can be used solely for the student's own private use. Since this is a seminar class and meetings are all online, students are required to use their cameras during the class.

Copyrighted materials

All material provided through this web site is subject to copyright. This applies to class/recitation notes, slides, assignments, solutions, project descriptions, etc. You are allowed (and expected!) to use all the provided material for personal use. However, you are strictly prohibited from sharing the material with others in general and from posting the material on the Web or other file sharing venues in particular.

Diversity and Inclusion

Northeastern University does not tolerate any form of discrimination, harassment, or retaliation based on disability, race, color, religion, national origin, ancestry, genetic information, marital status, familial status, sex, age, sexual orientation, veteran status or gender identity or other factors as stated in the University’s Title IX policy. The University is committed to taking prompt action to end a hostile environment that interferes with the University’s mission. For more information about policies, procedures, and practices, see: https://diversity.northeastern.edu/